3 AI tools to reduce qualitative data analysis time by >50%

..and analyzing interview data for product managers, market researchers, consumer insights professionals and researchers

Two dear friends of mine brought up the same question a week ago -

How can I use AI to save time on analyzing qualitative data?

Of course, I took that as a sign from the AI Gods to write this article.

To be clear, they were both referring to analysis of qualitative data gathered through interviews. I will focus on this narrow piece of research methods and techniques for this article.

AI tools built on the foundation of LLMs (large language models) are best suited for working with text data and completing tasks such as summarization and synthesis.

I’m going to recommend three AI tools that you can consider using as you tackle your interview data. These are not the only tools that can support this type of project. But, they are the ones that I have vetted and I know can deliver the goods!

Collecting interview data

Research interviews are used by many groups such as product managers, market researchers, consumer insights leads and graduate students toiling away at their dissertations to draw valuable insights.

At a high level, the process from cradle to conclusions goes through these stages -

Define research question → Plan and organize interviews → Conduct Interviews → Analyze Data → Present Results.

In my opinion, the most time-consuming and tedious parts of the process are the last three stages (Conduct Interviews, Analyze Data and Present Results), which include tasks such as

Transcribing interviews

Coding and analyzing the content in interview data and

Creating presentations to share results

There are some things money can’t buy; for these three tasks, there are AI tools (sorry, Mastercard ad creators - I borrowed part of your lines)!

The Tools

1. Transcribing interviews with ElevenLabs

The first time ever that I transcribed interviews was as a graduate student. This was almost 20 years ago (great! I just dated myself) and at a time when there were very few tools available. The ones that did exist barely got the job done. Thus, I spent several weeks and months mostly manually transcribing the data with many stops, restarts and repeats to the recordings.

Since then, technology has improved the process a great deal. But, accuracy of transcribed data has continued to be an issue. You still need to review and correct the transcript, which can be time consuming.

Today, one of the tools that can (1) reduce total transcription time to mere minutes and (2) do it more accurately than many others on the market is Scribe, the speech-to-text tool developed by ElevenLabs.

→ Time saved ~ 60%

What distinguishes this tool from others in the market is the quality and flexibility. Scribe helps you transcribe any speech, be it interviews or any other conversation or monologue. It can also identify multiple speakers. ElevenLabs claims that it is the most accurate model that exists and can transcribe speech in 99 languages.

That explains its popularity among users. As of March 2025, it was ranked #11 in the top 50 list of Gen AI Web Products based on unique monthly visits.

OK, so now you have your accurate transcript ready. The next step is to analyze those transcripts.

2. Analyzing content with Claude

Choosing the content-analysis tool

You can use ChatGPT for this part of the process if that is the tool you are most familiar with.

I prefer Anthropic’s Claude for a few reasons. It does not save my questions and conversations beyond that session or a short time afterwards, does not save any documents that I’ve uploaded and does not use my conversations to train its models. This gives me a sense of control over how my information is used. For that reason, I also consider it a safe place to upload documents if I need to. It is only a personal preference.

Read this from the bot itself -

And here’s a related quote from Anthropic which built the Chatbot Claude, “Anthropic has made it as transparent as possible that it will never use a user's prompts to train its models unless the user's conversation has been flagged for Trust & Safety review, explicitly reported the materials, or explicitly opted into training. Furthermore, Anthropic has never automatically used user data to train its models.”

Many Chatbots use inputs from users like you and me to train and improve their responses. While that may not bother some folks, there are others who consider it a violation of their privacy even if no personally identifiable information is included.

Writing prompts to analyze your data

Regardless of which tool you use for this portion of the process, you would need to write text prompts to get your desired output.

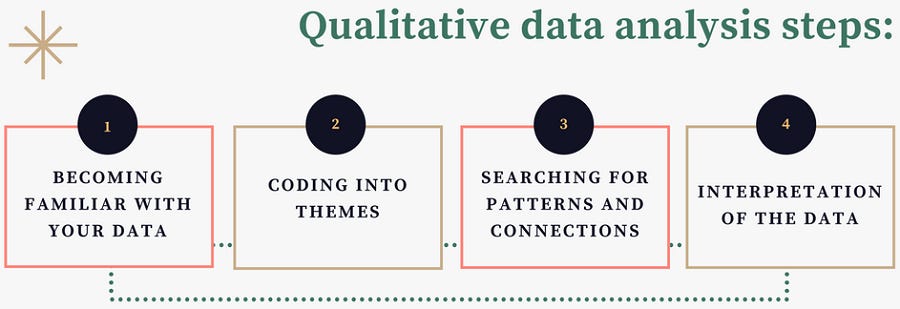

Most researchers analyze interview content (i.e. text dataset) by identifying common themes that emerge and then coding the text dataset with those themes. The themes are usually narrow but that would vary by researcher and purpose.

There are two ways in which you can analyze your text data using Claude or your chosen tool.

1. The open-ended way

You can ask the AI tool to identify common themes in your data. In the next step, you ask it to get more granular with those themes.

This will create categories and sub-categories. You can also ask the tool to code the text using that categorization, put those details in a table, create timestamps, include quotes and format the results in a specific way.

With this blue sky approach, you are allowing the tool to find the patterns without providing any guidance. If you are just exploring your text data, this might reveal some interesting insights you haven’t considered.
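If you ask the tool to return its coding as a table, it helps to decide up front what each row should hold. Here is a minimal Python sketch of one possible layout, assuming four columns (theme, sub-theme, quote, timestamp). The class name, the field names and the sample rows are all made up for illustration; they are not output from any tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class CodedSegment:
    """One coded excerpt, in the shape you might ask the tool to return."""
    theme: str
    subtheme: str
    quote: str
    timestamp: str  # position in the recording, e.g. "00:04:10"


def theme_counts(segments: list[CodedSegment]) -> Counter:
    """Count how many coded excerpts fall under each theme."""
    return Counter(s.theme for s in segments)


def as_table(segments: list[CodedSegment]) -> str:
    """Render the coded excerpts as a simple pipe-delimited text table."""
    rows = ["theme | subtheme | timestamp | quote"]
    for s in segments:
        rows.append(f"{s.theme} | {s.subtheme} | {s.timestamp} | {s.quote}")
    return "\n".join(rows)


# Made-up example rows, for illustration only.
segments = [
    CodedSegment("Pricing", "Too expensive", "The monthly fee is hard to justify.", "00:04:10"),
    CodedSegment("Pricing", "Discounts", "I only signed up because of the trial.", "00:09:32"),
    CodedSegment("Onboarding", "Setup time", "It took me a week to get going.", "00:15:05"),
]

print(as_table(segments))
print(theme_counts(segments).most_common())
```

Keeping the quote and timestamp next to each code makes it easier to go back to the recording and check the tool's work.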

2. The scientific way (recommended)

Most of the popular Chatbots available right now have general knowledge sourced from public sources. The models are not trained on or built for domain-specific knowledge such as criminal law or oncology.

The best way to use AI tools in their current state of imperfection is to have a human-in-the-loop. Researchers should ideally review the transcripts, develop their own domain-specific categories and codes based on the research goals, questions and hypotheses. Then, use the tools to expand on those and identify any missing or outlier themes.

→ Time saved ~50%
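One way to keep the human in the loop with this approach is to compare the themes the tool suggests against your own codebook and review only the ones you had not listed. Below is a rough Python sketch of that comparison. The function names and the sample labels are hypothetical, and the matching is by exact label only (after tidying case and spacing), so it will not notice that two differently worded labels mean the same thing.

```python
def normalize(label: str) -> str:
    """Lowercase and collapse whitespace so 'Onboarding ' matches 'onboarding'."""
    return " ".join(label.lower().split())


def themes_to_review(codebook: list[str], ai_themes: list[str]) -> list[str]:
    """Return AI-suggested themes that are absent from the researcher's codebook."""
    known = {normalize(code) for code in codebook}
    seen = set()
    extra = []
    for theme in ai_themes:
        key = normalize(theme)
        # Skip themes already in the codebook and repeats of the same suggestion.
        if key not in known and key not in seen:
            seen.add(key)
            extra.append(theme.strip())
    return extra


# Made-up labels, for illustration only.
codebook = ["Pricing", "Onboarding", "Customer support"]
ai_themes = ["pricing", "Data privacy", "Onboarding ", "data  privacy", "Integrations"]

print(themes_to_review(codebook, ai_themes))
```

The researcher then decides whether each leftover theme is a genuine gap in the codebook, an outlier worth noting, or noise.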

Regardless of the method you choose, you would need to get adept at prompt engineering.

I came across a very useful checklist from Harvard University on constructing good prompts. Check out the details here but the key points are below.

Be clear and specific about the results you are looking for and the format for the output (e.g. table, paragraph, calendar)

Keep refining your prompts to get narrower and home in on the answer you want. The more granular you get, the better the results.

Give it context such as a location (research universities with art schools in Manhattan) or a personality (‘act as if you are my doctor’ when answering what I should cook today with eggs) or a target audience (make a list of five live action movies with Ryan Reynolds that are kid friendly)

Set boundaries and limits represented by numbers or ‘do’ and ‘don’t’ (e.g. give me three strength training exercises that don’t involve kettle bell weights)

Make it interactive - ask it to help you write a better prompt to get to your answer (counterintuitive, I know), give it feedback and correct it as needed
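To make the checklist concrete, here is a small Python sketch that assembles a prompt from the same ingredients: the task, the output format, some context and a few limits. `PromptSpec` and its fields are names I made up for this example, not part of any tool; the rendered text is simply what you would paste into Claude or your chosen Chatbot.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """The checklist items as fields: what you want, in what shape, and within what limits."""
    task: str
    output_format: str
    context: str = ""
    limits: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Assemble the pieces into one block of prompt text."""
        parts = []
        if self.context:
            parts.append(f"Context: {self.context}")
        parts.append(f"Task: {self.task}")
        parts.append(f"Output format: {self.output_format}")
        if self.limits:
            parts.append("Limits:")
            parts.extend(f"- {limit}" for limit in self.limits)
        return "\n".join(parts)


# Example wording only; adapt it to your own study.
spec = PromptSpec(
    task="Identify the common themes in the interview transcript I paste next.",
    output_format="A table with columns: theme, description, supporting quote.",
    context="You are helping a market researcher analyze customer interviews.",
    limits=[
        "Return at most five themes.",
        "Quote the transcript verbatim; do not paraphrase.",
    ],
)

print(spec.render())
```

Writing the pieces down separately also makes it easier to refine one of them at a time, which is the second item on the checklist.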

Be mindful of the limitations

You’ve probably heard of this one before - AI hallucinations! Hallucinations refer to the fact that your Chatbot not only gives incorrect answers to some questions but also makes up answers - i.e. hallucinates!

It can be difficult for a user to distinguish between a true or false response. There are technical reasons for this related to how the programs are built. We won’t get into those here but pretty much every Chatbot suffers from them to some degree.

There is no way to prevent hallucinations for now. But, we can try to work around them by using these tactics.

Fact checking and verifying results through external sources

Using the prompt-writing suggestions mentioned above, such as clear, specific prompts, making it think, providing context and others on the list. Also provide one prompt at a time and then narrow it down like peeling the layers of an onion. That keeps the Chatbot focused on one single request at a time instead of burdening it with a complex task all at once

Ask it to confirm its own results (this one can get tricky although it is worth a shot. Microsoft’s Bing Chatbot got belligerent in a well covered case in which it refused to acknowledge that it had got some information wrong and became offensive to the user)
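For interview data, one cheap fact check is to confirm that every quote the tool hands back actually appears in your transcript. Here is a minimal Python sketch of that check. The function names and the sample text are made up, and it only catches quotes that were invented or reworded; it cannot tell you whether a real quote was assigned to the right theme.

```python
import re


def clean(text: str) -> str:
    """Lowercase, straighten curly quotes and collapse whitespace before comparing."""
    text = text.replace("\u2019", "'").replace("\u2018", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()


def unverified_quotes(transcript: str, quotes: list[str]) -> list[str]:
    """Return the quotes that do NOT appear word for word in the transcript."""
    haystack = clean(transcript)
    missing = []
    for quote in quotes:
        needle = clean(quote)
        # An empty quote proves nothing, so treat it as unverified too.
        if not needle or needle not in haystack:
            missing.append(quote)
    return missing


# Made-up transcript and quotes, for illustration only.
transcript = (
    "Interviewer: How was setup?\n"
    "Participant: It took me a week to get going, honestly."
)
quotes = [
    "It took me a week to get going",
    "Setup was quick and painless",
]

print(unverified_quotes(transcript, quotes))
```

Anything this returns deserves a second look in the original recording before it goes into your results.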

3. Creating presentations to share results with Gamma

Presumably, you also used Claude to summarize the results and list the takeaways after your content analysis in the step above. Now to share these through presentations.

The market for presentation tools is dominated by Microsoft’s PowerPoint and Google’s Slides. To use these tools, you need a moderate level of design skills. Even if you eventually master enough of those to get a reasonably appealing slide deck out the gate, there is limited flexibility with sizing and other visuals. Over the years, both PowerPoint and Google Slides have added more features, including AI ones, and made the process less frustrating (personal opinion here). But, it still takes a lot of time.

Recently, I started using Gamma. It provides AI-powered tools to create presentations, websites, documents and social media content in minutes. It saves you hours and days of time while also giving you the flexibility and chops to make visually appealing creations. You can create any of these using text prompts or by uploading your own content.

Once you have your content analyzed as in point #2 above, you can use Gamma to create a presentation in half the time it takes to make one in any of the market-leading tools. As of March 2025, Gamma was ranked #16 in the top 50 list of Gen AI Web Products based on unique monthly visits.

→ Time saved ~50%

To get a detailed account of how Gamma works including a simple demo, see the article below that appeared in this newsletter some weeks ago.

Before using these AI tools…..

Know the process before you economize it with AI

We should all be learning how to use AI to become more productive and efficient. However, it is extremely important to know how to complete that process successfully without the help of AI. That will allow you to competently review and spot any mistakes that AI might have made, because those systems are not yet perfect and are prone to errors.

In other words, learn and know how to change a flat tire yourself (metaphorically speaking) before letting AI generate the step-by-step process for you.

Human in the loop

For now, the optimal way to employ AI tools for your benefit is by collaborating with the tool rather than letting it work independently. This entails providing guidance and feedback to the tool and teaching it through text prompts. This way, you can get more reliable results with adequate quality control provided by the human.

Note: This is not a sponsored post or ad. In some cases, such as ElevenLabs, we may earn an affiliate commission at no extra cost to you if you make a purchase through its link.

This is a great overview of how AI is impacting qualitative data analysis! It's exciting to see tools like ElevenLabs and Claude being used to streamline the process. The point about prompt engineering is spot on - the more specific you are, the better the results you'll get. At Solid we're trying to help people be more specific with the prompts they give us.

I especially appreciated the emphasis on the 'human-in-the-loop' approach. While AI can automate many tasks, it's crucial to have a researcher's expertise to guide the analysis and ensure the insights are accurate and relevant.

It's also a great callout to be aware of AI hallucinations. Fact-checking and cross-validation are essential when working with these tools. Thanks for sharing these practical tips and tool recommendations.